Cliff Stamp

... but I'm not paid either.

So if there is an increase in sales of the FF knives, which leads to a demand for the material, do you or do you not make money from it?

No, primarily because I don't agree with the disclaimer.

Repeatability is the main concern, along with correlation of the data to the actual conclusion, i.e., that this would be useful for people. That would be the reason for the disclaimer.

I don't disagree with you, but several knife makers do.

Physics agrees with me; having numbers on their side is meaningless. It just means more of them are wrong.

But I haven't been able to understand how you estimated the uncertainty for the cut ratio. That's why I keep asking the question.

It is determined a hundred times or so in the Monte Carlo fits, and an average and standard deviation are calculated.
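The procedure can be sketched roughly as follows. This is a minimal illustration, not the actual fitting code: the power-law model (force to cut versus media cut), the force threshold that defines the cut ratio, the log-normal noise, and all of the data are hypothetical assumptions. Each trial draws a synthetic data set around each knife's fit, refits it, and recomputes the ratio; the mean and standard deviation over about a hundred trials give the estimate and its uncertainty.

```python
import math
import random
import statistics

def fit_power_law(xs, ys):
    """Least-squares fit of log(y) = log(a) + b*log(x).

    Returns (a, b, sd), where sd is the residual standard deviation in log space.
    """
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    sxx = sum((u - mx) ** 2 for u in lx)
    sxy = sum((u - mx) * (v - my) for u, v in zip(lx, ly))
    b = sxy / sxx
    log_a = my - b * mx
    resid = [v - (log_a + b * u) for u, v in zip(lx, ly)]
    sd = math.sqrt(sum(r * r for r in resid) / (n - 2))
    return math.exp(log_a), b, sd

def media_to_threshold(a, b, threshold):
    """Media cut at which the fitted force a*x**b reaches the (assumed) threshold."""
    return (threshold / a) ** (1.0 / b)

def monte_carlo_cut_ratio(xs, ys_a, ys_b, threshold, trials=100, seed=1):
    """Refit synthetic data drawn around each fit; return (mean, sd) of the cut ratio."""
    rng = random.Random(seed)
    fits = [fit_power_law(xs, ys) for ys in (ys_a, ys_b)]
    ratios = []
    for _ in range(trials):
        x_at = []
        for a, b, sd in fits:
            # Resample every point around its fitted value with log-normal noise
            # whose spread matches the residuals of the original fit.
            synth = [a * x ** b * math.exp(rng.gauss(0.0, sd)) for x in xs]
            a2, b2, _ = fit_power_law(xs, synth)
            x_at.append(media_to_threshold(a2, b2, threshold))
        ratios.append(x_at[0] / x_at[1])
    return statistics.mean(ratios), statistics.stdev(ratios)

# Hypothetical measurements: force needed to cut vs. media cut for two knives.
gen = random.Random(0)
xs = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
ys_a = [1.0 * x ** 0.5 * math.exp(gen.gauss(0, 0.05)) for x in xs]
ys_b = [1.5 * x ** 0.5 * math.exp(gen.gauss(0, 0.05)) for x in xs]

mean, sd = monte_carlo_cut_ratio(xs, ys_a, ys_b, threshold=10.0)
print(f"cut ratio = {mean:.2f} +/- {sd:.2f}")
```

With these made-up inputs the underlying ratio is 2.25, so the printed mean should land near that, with a spread set by the scatter in the data.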

Would you show me how to transform the functions so the advantage of FFD2 is a million percent?

This is high-school algebra. Let f1 and f2 be two functions. If you are taking a difference, then scaling the functions will scale the difference:

Diff = C*f1 - C*f2 = C*(f1 - f2)

A ratio is no different; simply consider the transform g(x) = exp(x), which will induce a huge change in the ratio. You have to show that the transform you use is physically meaningful.
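The point about transforms can be checked with a few lines of arithmetic. The two scores (10 and 5) and the particular transforms below are hypothetical, chosen only for illustration: a linear scaling multiplies the difference by C but leaves the ratio alone, while a nonlinear transform such as exp inflates the ratio, and exp(3x) pushes the "percent advantage" well past a million percent.

```python
import math

def scaled_difference(f1, f2, c):
    """C*f1 - C*f2, which equals C*(f1 - f2)."""
    return c * f1 - c * f2

def transformed_ratio(f1, f2, g):
    """Ratio of the two values after applying the transform g to each."""
    return g(f1) / g(f2)

def percent_advantage(r):
    """Express a ratio r as a percent advantage, e.g. 2.0 -> 100 percent."""
    return (r - 1.0) * 100.0

f1, f2 = 10.0, 5.0  # hypothetical scores for the two steels
print(scaled_difference(f1, f2, 3.0))                # 15.0, i.e. 3 * (10 - 5)
print(transformed_ratio(f1, f2, lambda v: 3.0 * v))  # 2.0, same as f1 / f2
print(transformed_ratio(f1, f2, math.exp))           # exp(5), about 148.4
print(percent_advantage(transformed_ratio(f1, f2, lambda v: math.exp(3.0 * v))))  # over 3e8 percent
```

Nothing about the underlying performance changed between the last three lines; only the transform did, which is why the transform itself has to be justified physically.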

tnelson, as I noted:

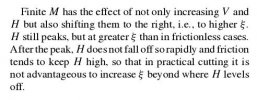

... If you assume it is wear-based, then as the edge thickens, the pressure under the same force is reduced, so the rate is reduced; thus the 0.5 law.
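As a sketch of where the 0.5 law comes from, assume the rate at which the edge width w grows per unit of media cut x is proportional to the contact pressure, which under a fixed force F scales as 1/w. Here k is an assumed wear constant and w_0 the initial edge width; both are illustrative symbols, not quantities from the original data:

```latex
\frac{dw}{dx} = kP = \frac{kF}{w}
\quad\Longrightarrow\quad
w\,dw = kF\,dx
\quad\Longrightarrow\quad
w^2 = w_0^2 + 2kFx
\quad\Longrightarrow\quad
w \approx \sqrt{2kF}\,x^{1/2} \quad (w \gg w_0)
```

Once the edge has widened well past its starting width, the width grows as the square root of the media cut.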

This is basic differential equations. As noted, you will see this when changing the angle on a blade: the more you grind, the longer it takes to remove a similar amount of material, because the contact width increases.
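A quick numerical check of the same ideal model, offered as a hedged sketch: forward-Euler integration of dw/dx = kF/w with arbitrary, hypothetical parameters, followed by the log-log slope of w versus x, which should come out close to 0.5. It also mirrors the grinding observation: the width gained over the last tenth of the run is much smaller than over the first tenth.

```python
import math

def simulate_edge_width(w0, kF, dx, steps):
    """Forward-Euler integration of dw/dx = kF / w (growth slows as the edge widens)."""
    w = w0
    widths = [w]
    for _ in range(steps):
        w += kF / w * dx
        widths.append(w)
    return widths

def loglog_slope(x1, y1, x2, y2):
    """Exponent n in y ~ x**n estimated from two points."""
    return (math.log(y2) - math.log(y1)) / (math.log(x2) - math.log(x1))

# Hypothetical parameters in arbitrary units.
w0, kF, dx, steps = 0.01, 1.0, 0.01, 100_000
widths = simulate_edge_width(w0, kF, dx, steps)

# Media cut is x = step * dx, so steps 50_000 and 100_000 are x = 500 and x = 1000.
slope = loglog_slope(500.0, widths[50_000], 1000.0, widths[100_000])
print(f"growth exponent ~ {slope:.3f}")  # close to 0.5

# Same amount of media cut, very different gain in width early vs. late.
early_gain = widths[10_000] - widths[0]
late_gain = widths[100_000] - widths[90_000]
print(f"width gained: first tenth {early_gain:.2f}, last tenth {late_gain:.2f}")
```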

However, this is just the ideal behavior. In reality, the edge is also deforming and chipping at the same time, which changes the effective thickness. As well, the wear rate changes because the edge goes from the matrix wearing around the carbides to the large carbides coming out.

The physics behind these mechanisms has been presented in detail by several of the authors cited. I started with a simple ideal behavior (the harmonic oscillator, for example) and found that it alone was sufficient to model the behavior.

-Cliff