I completed some statistical analyses on the BF camp knife contest. Overall there were 21 knives tested across 13 testing categories. All scores were graded by the three reviewers with the exception of Food Preparation, which was only assessed by Big Mike. Since this category involved only one of the three reviewers, I omitted it from my analysis, as I was more interested in aspects such as the variation between reviewers and how this contributed to overall scores.

The first test I did was to simply compare the overall score (sum of the 12 testing scores, excluding food prep results) between the three reviewers. Since Tony Is THE MAN, I made him the x-axis and I placed Marcelo's and Big Mike's scores on the y-axis of the figure below. Each symbol represents the results of a specific knife (green stars = Marcelo; red circles = Big Mike) as contrasted against Tony's score. The diagonal black line represents a perfect fit. Symbols that fall on this diagonal line have a perfect correspondence between Tony's score and either Marcelo's or Big Mike's score.

Basically, there was a very good correlation between the overall scores generated by Marcelo and Tony, and between those generated by Big Mike and Tony. Tony and Marcelo were more often in agreement with one another than Tony and Big Mike were. There was also quite a bit of disagreement between the reviewers on a few knives. This includes scores provided for C. Bryant, GW Schmidt, Pinault and Mud Creek, where a low overall rating by one reviewer had a larger influence on the average score for these knives. In two cases this would not affect the overall ranking of the knife to a great degree. In the two other cases this would affect fine scale positioning within the pack of results but would not influence the top performers in the contest.

For stats junkies, linear regression analysis indicated that Tony's performance scores explained (or would be predictive of) 72% of the variation in Marcelo's scores (adjusted R² value) and 60% of the variation in Big Mike's scores. In the two regression analyses, the slope was not significantly different from a value of 1, nor was the constant significantly different from a value of 0. This means that the 1:1 line provides an adequate description of this correlation, essentially as good as the best fit least squares regression does. It also implies that our three reviewers were overall of like mind (with some exceptions) in their ranking of individual knives.
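For anyone who wants to try this kind of check themselves, here is a minimal sketch of the regression test described above. It is not the exact procedure I ran; the function name `regress_against_reference` and the demo numbers are made up for illustration and are not the contest scores. It fits a least squares line, then reports t statistics for "slope vs 1" and "constant vs 0" along with the adjusted R².

```python
import numpy as np

def regress_against_reference(x, y):
    """Least squares fit of y on x, with t statistics for slope=1 and intercept=0."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    X = np.column_stack([np.ones(n), x])           # design matrix: [constant, x]
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)   # beta[0]=intercept, beta[1]=slope
    resid = y - X @ beta
    dof = n - 2                                    # two fitted parameters
    sigma2 = resid @ resid / dof                   # residual variance
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / dof
    return {
        "intercept": beta[0],
        "slope": beta[1],
        "t_intercept_vs_0": beta[0] / se[0],
        "t_slope_vs_1": (beta[1] - 1.0) / se[1],
        "adj_r2": adj_r2,
    }

# Made-up demo data (NOT the contest scores): 21 knives, a reference reviewer
# and a second reviewer who agrees on average but with some scatter.
rng = np.random.default_rng(0)
reference = rng.uniform(60, 110, size=21)
other = reference + rng.normal(0, 8, size=21)
result = regress_against_reference(reference, other)
print(result)
```

With 21 knives there are 19 degrees of freedom, so a t statistic with absolute value below roughly 2.09 would not be significant at the 5% level (two-sided). That is the sense in which the slope is "not significantly different from 1".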

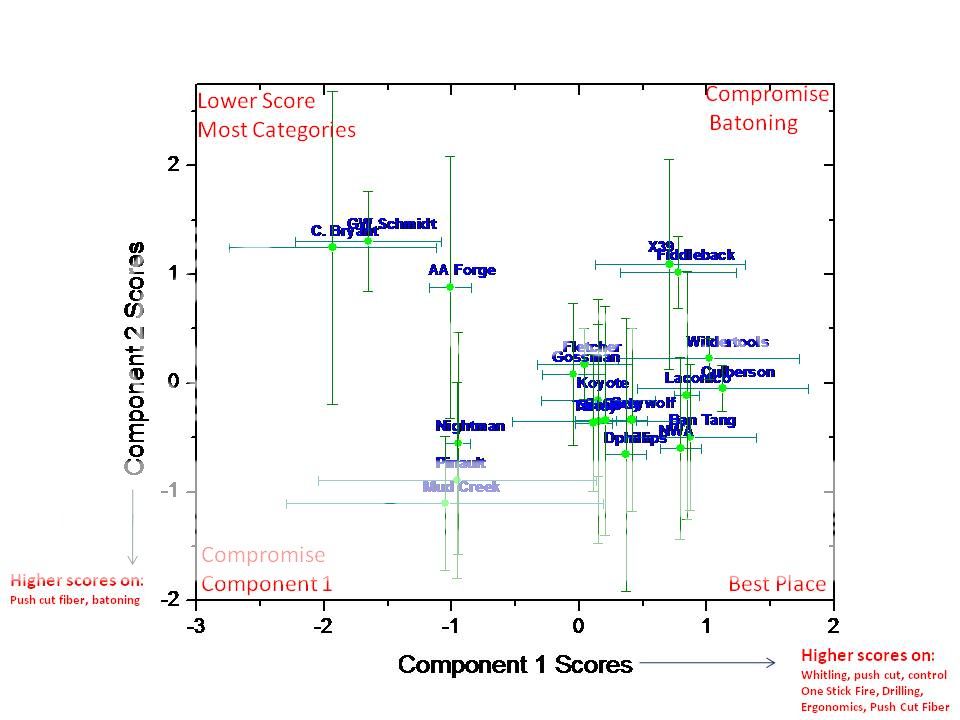

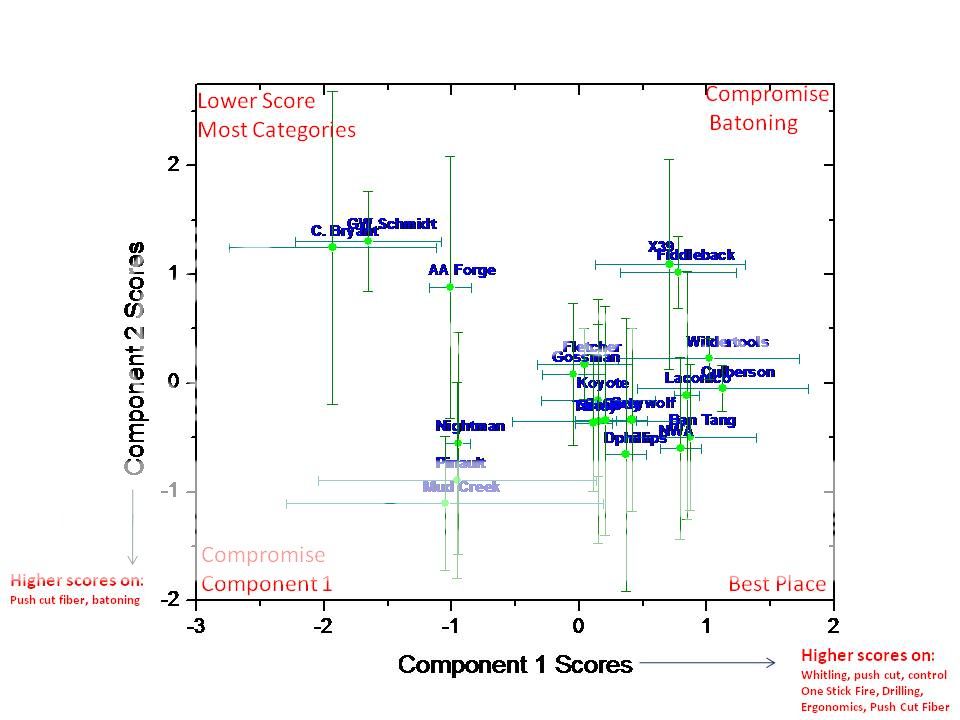

The second statistical assessment I performed was more complicated but also (I think) interesting. I performed a multivariate analysis called principal components analysis (PCA) using the testing scores across all categories. This analysis is a type of statistical approach referred to as a data reduction method. Essentially, it finds correlations between the different types of tests across the different knives and lumps this variation into a reduced set of test metrics. The simplest analogy is that Marcelo lumped all the tests into a single test metric called performance, which was the sum of all test scores. In the PCA, we are reducing the number of metrics overall and limiting it to two or three testing metrics instead of just one as in the case of Marcelo's test results. This allows separation of knives into groups that perform similarly across a series of tests. Essentially, it allows us to examine whether there are patterns present. For example, do knives that behave very well in a couple of testing categories show compromise in other testing categories?

After running the principal components analysis, I found that 3 significant components came out. We will call each of these components a clumping of similar (correlated) test outcomes across the knives. The first component had the highest number of tests correlating to its axis. Basically, individual knives that show high scores on component 1 tended to receive higher test scores in the following metrics: Whittling, Push Cutting, Control, One Stick Fire, Drilling, Ergonomics, Push Cuts on Fiber. They also tended to do well (but not as strongly correlated) for Fit & Finish, Edge Retention and Sheath. Some of these correlations make sense. For example, a knife maker who pays attention to fit and finish is likely to also produce a fine looking sheath. Similarly, we find many performance aspects related to wood work and cutting correlated here. The second component only had 2 tests that correlated to its axis. These were moderate negative correlations for Push Cut Fiber and Batoning. This means that knives with a low score (or high negative value) on this axis tended to perform better for Push Cut Fiber and Batoning. The third component had only one variable associated with it, and that was Sharpening.
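To make "tests correlating to an axis" a bit more concrete, here is a rough sketch of a PCA on a score table. Several things here are assumptions: the function name `pca_loadings` is made up, the data are random numbers rather than the contest scores, I assume one row per knife/reviewer pair and one column per test, and the "eigenvalue greater than 1" cutoff for counting components is just one common rule of thumb, not necessarily what produced the 3 components above.

```python
import numpy as np

def pca_loadings(scores):
    """PCA on the correlation matrix of a (rows x tests) score table."""
    scores = np.asarray(scores, dtype=float)
    # Standardize each test so no single test dominates because of its scale.
    z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)
    corr = np.corrcoef(z, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)        # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]              # largest component first
    eigvals = np.clip(eigvals[order], 0.0, None)   # guard against tiny negatives
    eigvecs = eigvecs[:, order]
    loadings = eigvecs * np.sqrt(eigvals)          # correlation of each test with each component
    component_scores = z @ eigvecs                 # position of each row on each component
    explained = eigvals / eigvals.sum()            # share of total variance per component
    return loadings, component_scores, explained

# Made-up demo data (NOT the contest scores): 63 rows (21 knives x 3 reviewers),
# 12 tests, with one shared underlying "quality" factor plus noise.
rng = np.random.default_rng(1)
latent = rng.normal(size=(63, 1))
demo = 0.8 * latent @ np.ones((1, 12)) + rng.normal(size=(63, 12))

loadings, component_scores, explained = pca_loadings(demo)
n_keep = int(np.sum(explained * demo.shape[1] > 1.0))  # assumed eigenvalue > 1 rule
print(np.round(explained[:3], 3), n_keep)
```

Each column of `loadings` tells you which tests move together on that component. The sign of a component is arbitrary, which is why a negative loading (as with Push Cut Fiber and Batoning on component 2) only means that better knives sit at the low end of that axis.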

Okay, so the above might be a bit complicated. Let me explain the graph below, since visually this makes more sense and demonstrates the power of this kind of statistical analysis. I plotted the average scores across the reviewers for each knife in two dimensional space, focusing on component 1 and component 2 scores. Each dot on the graph represents a specific knife and the label corresponds to its maker. The error bars plot the variation around the knife scores along the x- or y-axis. Large error bars on a knife basically mean disagreement between reviewers on the absolute position of that knife. Knives that fall closer to the bottom right quadrant are the ones that test highest across multiple testing metrics. Knives that fall in the upper left tended to have lower scores across all the components (except for sharpening or food prep, which are not represented here).
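The dots and error bars in a plot like this come from collapsing the reviewer-level component scores down to one point per knife. Here is a small sketch of that step, under some assumptions: the helper name `summarize_by_knife` is made up, the rows are assumed to be ordered knife by knife (all three reviewers for knife 1, then knife 2, and so on), the error bar is taken to be the standard deviation across reviewers (it could just as well be a standard error), and the numbers are random rather than the real scores.

```python
import numpy as np

def summarize_by_knife(component_scores, n_knives, n_reviewers):
    """Mean position and reviewer spread per knife on each component."""
    scores = np.asarray(component_scores, dtype=float)
    # Assumes rows are grouped knife by knife, one row per reviewer.
    grouped = scores.reshape(n_knives, n_reviewers, -1)
    centers = grouped.mean(axis=1)           # dot position for each knife
    spread = grouped.std(axis=1, ddof=1)     # error bar length (assumed: sample SD)
    return centers, spread

# Made-up demo data (NOT the contest scores): 21 knives x 3 reviewers,
# scores on component 1 and component 2.
rng = np.random.default_rng(2)
demo_scores = rng.normal(size=(21 * 3, 2))
centers, spread = summarize_by_knife(demo_scores, n_knives=21, n_reviewers=3)
print(centers.shape, spread.shape)
```

With only three reviewers per knife, these spreads are rough, which is part of why overlapping error bars make it hard to separate neighbouring knives.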

We see from the above that there are different clumps in this 2-dimensional space and the straightforward assessment of Who Wins is not quite as easy to judge. Culberson still wins on the component 1 metrics, followed very closely by Wildertools. However, if you were somebody who really valued the fiber push cutting test + batoning, you might be more inclined to suggest NWA and Ban Tang as the contest winners.

Truly, considering the error on the x-axis, which represents a wide range of test metrics, it is useful to note the proximity of many knives found within what could be construed as the "winners circle". These include: NWA, Ban Tang, Laconico, Fiddleback and X39. These five could be construed as having very high scores on the x-axis. Note that X39 and Fiddleback exhibited some compromises on the 2nd component - that relates to slightly weaker scores for batoning + push cut fiber.

Expanding this out and trying to be objective, I would say it is very difficult to truly distinguish many of the contest knives with any degree of confidence. More broadly, knives performing very well across multiple metrics include: Culberson, Wildertools, Ban Tang, Laconico, NWA, Fiddleback, X39, Grewywolf, D Phillips, C. Cody, Scout, Koyote, Turley, Fletcher and Gossman. (The proper statistical test to examine this would be canonical variates analysis, but we don't have nearly enough reviewers to objectively perform such a test.) We then see two lower ranked groups of knives. The mid-ranged knives include: Nightman, Pinault and Mud Creek. The lower ranked group is C. Bryant and GW Schmidt, and AA Forge fits somewhere between these two groups. I note specifically that in these latter groups the error bars tend to get wide, which means there was considerable disagreement between reviewers on these knives. This is particularly the case for C. Bryant and AA Forge on the 'batoning/push cut fiber' tests, where there seems to be wide disagreement (see also the correlation tests noted above).

So overall, these tests give us a slightly expanded picture compared to the average sum of scores presented by Marcelo in his test outcomes breakdown. Personally, I was expecting that the fit and finish testing categories would clump out independently of the performance metrics, but I was wrong in this assertion. I was also hoping that the analysis would show wider separation of test metrics across component axes, but instead found that one axis explained many of the test results. I would also like to say that the knife makers were not as far apart from each other as the average total scores might suggest. The winners in this case were not so far ahead of the pack as the additive scores might make them out to be. This isn't of course to take anything away from Culberson and Wildertools. It's only to say that many of the makers performed very well, and it's up to the relatively nuanced judgements of a very small set of reviewers, relative to the number of knife entries, to figure out who won.

Hope you guys enjoyed this little ditty of statistics fun. Now before I hear somebody saying that statistics can be made to say anything, let me just say that I wasn't looking for an answer here. I just wanted the patterns to speak for themselves. In this regard, the stats told me quite a bit, including new information that can be quite difficult to deduce by simply staring at a table of numbers.

Ken